Assets Server is a dynamically scalable system with a high level of built-in availability, redundancy and load balancing.

The Assets Server structure consists of a cluster of one or more nodes, each with the ability to take on a specific role such as that of a search engine for searching and indexing, a processing engine for processing files and generating previews, or a job scheduler for running scheduled jobs.

Note: Technically, all nodes are identical and are installed with the same installer. The role of a node is determined by the configuration that is set during installation.

The structure comes with the following advantages:

- Each search node holds part of the index

- All nodes share the search load

- Clients can talk to any Web API node; the load is balanced across them

- All nodes have direct access to the storage

- Multiple nodes can be set up with different roles

- Scaling is done horizontally, allowing storage of billions of assets

Search engine

Assets Server uses Elasticsearch as its search engine. Elasticsearch takes care of the horizontal scaling, searching and indexing.

Communication between nodes

Nodes communicate with each other through Hazelcast.

Cluster examples

Because Assets Server is distributed, a cluster can range from a single node to as many nodes as are needed to support millions or billions of files.

Note: A single node can typically already support a few million files.

This article outlines some typical clusters.

The following table provides a summary:

| | Single machine | Small cluster | Medium cluster | Big cluster |
|---|---|---|---|---|
| Number of nodes | 1 | 3 | 5 | Dozens |
| Maximum number of concurrent users | 10 – 20 | 20 – 40 | 50 – 100 | 5,000+ |
| Maximum number of assets | 5 million | 5 million | 50 million | 1 billion |
| Daily asset intake | Low volume | Medium volume | Medium volume | High volume |
| Data redundancy? | No | No ¹ | 1 Search node is allowed to fail without data loss ² | Several Search nodes are allowed to fail without data loss ³ |
| Processing redundancy? | No | 1 Processing node is allowed to fail | 1 Processing node is allowed to temporarily fail | Several Processing nodes are allowed to fail ³ |

¹ It is assumed here that the 3-node cluster consists of 1 Search node and 2 Processing nodes.

² It is assumed here that the 5-node cluster consists of 3 Search nodes and 2 Processing nodes.

³ When several nodes fail, performance will be affected; a new node is expected to take the place of a failed node.
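
As a rough illustration of how the summary table can be read, the following sketch picks the smallest cluster type whose limits cover a given number of concurrent users and assets. It is not part of Assets Server: the `ClusterTier` class and the `suggest_tier` function are hypothetical names, and the figure of 5,000 users for the big cluster is only a nominal bound because the table lists it as "5,000+".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterTier:
    name: str
    nodes: str
    max_users: int   # upper end of the concurrent-user range in the table
    max_assets: int  # maximum number of assets in the table


# Values copied from the summary table. The big-cluster user figure is an
# assumption: the table says "5,000+", so 5000 is only a nominal bound.
TIERS = [
    ClusterTier("Single machine", "1", 20, 5_000_000),
    ClusterTier("Small cluster", "3", 40, 5_000_000),
    ClusterTier("Medium cluster", "5", 100, 50_000_000),
    ClusterTier("Big cluster", "Dozens", 5000, 1_000_000_000),
]


def suggest_tier(concurrent_users: int, assets: int) -> ClusterTier:
    """Return the smallest tier whose table limits cover both figures."""
    for tier in TIERS:
        if concurrent_users <= tier.max_users and assets <= tier.max_assets:
            return tier
    # Anything beyond the table falls into the largest tier.
    return TIERS[-1]


if __name__ == "__main__":
    print(suggest_tier(concurrent_users=30, assets=4_000_000).name)
```

The sketch ignores daily asset intake and redundancy requirements, which can be a reason to choose a larger cluster than the user and asset counts alone suggest.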

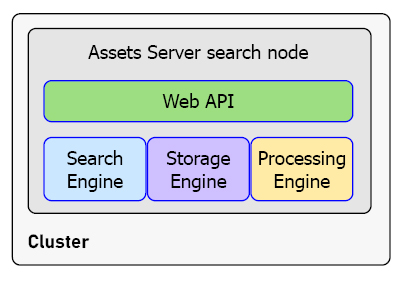

A single server of 1 node

In this cluster, Assets Server is installed on a single machine and therefore takes on all roles.

This cluster is not redundant: when the machine is down, Assets Server is not available.

Use this cluster for up to 10 – 20 concurrent users and for handling up to 5 million assets.

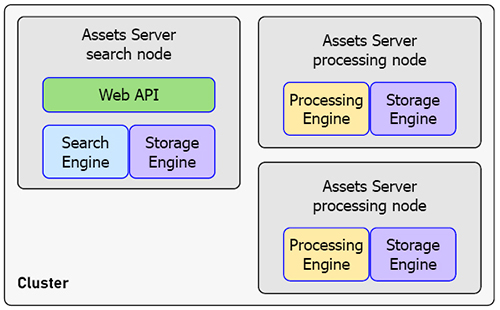

A small cluster of 3 nodes

In this cluster, 1 Assets Server installation is used as a Search node while the load of processing files and generating previews is balanced over 2 separate Assets Server installations that act as Processing nodes.

This cluster is semi-redundant: 1 Processing node is allowed to fail. However, make sure that it is brought back up (or replaced) as soon as possible.

Use this cluster for up to 20 – 40 concurrent users and for handling up to 5 million assets.

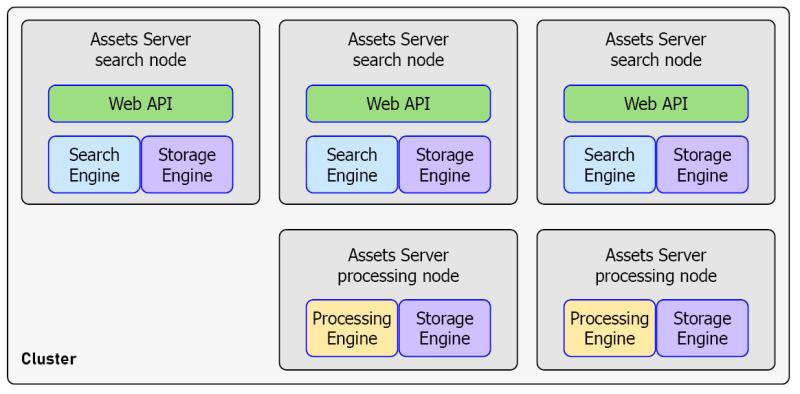

A medium cluster of 5 nodes

This is a typical cluster and consists of 3 Search nodes and 2 Processing nodes.

It can be expanded as needed by adding more Search nodes or Processing nodes. The ratio of Search nodes to Processing nodes does not have to stay fixed.

This cluster is fully redundant and resilient: when the cluster contains at least 3 Search nodes, Assets Server will automatically enable 1 replica shard for each index.

If 1 of the Search nodes goes down, the other 2 will keep running and all data will still be available. However, make sure that the failed Search node is brought back up (or replaced) as soon as possible. When 1 of the Processing nodes goes down, the other one will take on all processing. Again, make sure to replace the failed Processing node as soon as possible.
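
The effect of 1 replica shard per index can be illustrated with a small simulation. This is a simplified sketch, not how Elasticsearch actually allocates shards: the round-robin placement, the node names, the shard count of 6 and the functions `place_shards` and `available_shards` are all assumptions made for the example.

```python
from __future__ import annotations


def place_shards(num_shards: int, nodes: list[str], replicas: int = 1) -> dict[str, set[int]]:
    """Put each shard's primary and replica copies on distinct nodes, round-robin."""
    if replicas + 1 > len(nodes):
        raise ValueError("need more nodes than replicas to keep copies apart")
    placement: dict[str, set[int]] = {node: set() for node in nodes}
    for shard in range(num_shards):
        for copy in range(replicas + 1):
            # Copy 0 is the primary; later copies land on the following nodes.
            node = nodes[(shard + copy) % len(nodes)]
            placement[node].add(shard)
    return placement


def available_shards(placement: dict[str, set[int]], failed: set[str]) -> set[int]:
    """Return the shards that still have at least one copy on a running node."""
    alive: set[int] = set()
    for node, shards in placement.items():
        if node not in failed:
            alive |= shards
    return alive


nodes = ["search-1", "search-2", "search-3"]  # hypothetical node names
all_shards = set(range(6))                   # shard count chosen for illustration
placement = place_shards(num_shards=6, nodes=nodes, replicas=1)

# Any single Search node can fail without losing a shard ...
survives_single_failure = all(
    available_shards(placement, {node}) == all_shards for node in nodes
)
# ... but losing 2 of the 3 nodes at once leaves some shards without a copy.
survives_double_failure = all(
    available_shards(placement, {a, b}) == all_shards
    for a in nodes
    for b in nodes
    if a != b
)
```

In this model every shard has 2 copies on different nodes, which is why the cluster tolerates the loss of exactly 1 Search node and why the failed node should be replaced before a second one goes down.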

Note: To be completely resilient, a load balancer needs to be added in front of the Assets Server nodes that serve as Web API front-ends. This also helps to spread the load when many concurrent users are using the system.
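
What such a load balancer does can be sketched as a round-robin selection that skips nodes known to be down. This is only an illustration of the idea: in practice a dedicated load balancer would be used, and the `RoundRobinBalancer` class and the node names below are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out Web API nodes in turn, skipping nodes marked as down."""

    def __init__(self, nodes):
        self._nodes = list(nodes)
        self._healthy = set(self._nodes)
        self._cycle = cycle(self._nodes)

    def mark_down(self, node):
        self._healthy.discard(node)

    def mark_up(self, node):
        if node in self._nodes:
            self._healthy.add(node)

    def next_node(self):
        if not self._healthy:
            raise RuntimeError("no healthy Web API nodes available")
        # One full pass over the nodes is enough to find a healthy one.
        for _ in range(len(self._nodes)):
            node = next(self._cycle)
            if node in self._healthy:
                return node
        raise RuntimeError("no healthy Web API nodes available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["api-1", "api-2", "api-3"])  # hypothetical names
    balancer.mark_down("api-2")
    print([balancer.next_node() for _ in range(4)])
```

Requests keep flowing to the remaining nodes while a failed node is down, and the node rejoins the rotation once `mark_up` is called for it.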

Use this cluster for up to 50 – 100 concurrent users and for handling up to 50 million assets.

Large clusters

Large clusters typically involve adding load balancers and dividing clusters over multiple data centers.

For implementing such large clusters, please contact WoodWing Support to discuss the expectations, setup, and planning.
