Introduction

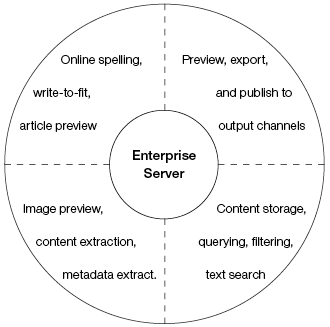

Enterprise Server integrates with various 3rd-party systems and components, each requiring its own resources (such as memory, CPU power and storage). The following figure shows how these can be grouped into four functional areas, called features.

Figure: Enterprise Server features categorized

The characteristics of resource consumption vary, depending on which features are heavily used during production. When to offload a particular feature to another physical box is sometimes obvious, or even predetermined by limitations of, for instance, the chosen operating system or database. However, bottlenecks can also appear as an unforeseen ‘surprise’ during production. This can happen right after a clean installation, or much later after changing the configuration or the way of working, adding another editorial department, and so on.

Some features are technically bound to each other. When that is the case, offloading one feature implies that the other needs to move to or be replicated on another physical box as well.

A physical box could be any of the following:

- A physical machine

- A virtual machine, such as a VMware image

- A cloud instance, such as Amazon EC2

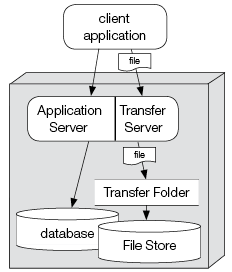

In this article, a physical box is illustrated as follows:

Enterprise architecture

Enterprise has a 3-tier architecture. Clients can be deployed on as many workstations as needed. Handling the load generated by all those clients makes deployment more of a challenge for the back-end than for the front-end. This article therefore focuses on the back-end, with Enterprise Server as its ‘heart’. A deployment is successful when the heart keeps a healthy beat during peak hours, serving all clients with the expected responsiveness.

Note: Due to the many variations in configurations and infrastructures, the many ways the system is used and the fast evolution of hardware, it is impossible to suggest the best choice or to give absolute numbers. That kind of information is therefore not provided in this article. Instead, it explains how a bare Enterprise Server can be scaled up, which 3rd-party features it integrates and how those features can be scaled up as well. With this insight you can avoid problems, find bottlenecks and tailor your deployments to your customer’s situation and requirements.

3rd-Party integration

Enterprise Server integrates with many 3rd-party products, most of which are shown in the figure below.

Figure: A representation of how 3rd-party applications are integrated in Enterprise Server

These features are integrated for a reason: in most cases they perform a complex or heavy job, which consumes memory and processing power. This can cause expensive memory swapping or intensive CPU usage, with a negative impact on other processes serving other users. When many users work on such a server, one user can cause another user to wait. As a result, end-user waiting times become unpredictable.

Whether that is acceptable depends heavily on the operation the user performs. For example, a user generating a preview expects this process to take a few seconds, but another user saving a small article does not expect to wait at all. However, when the preview consumes all resources, the application server might get swapped out of memory, causing its responsiveness to drop considerably. As a result, the user saving the article starts wondering why the operation takes a few seconds, while the previous save operation took less than a second.

In terms of user experience, an unpredictable server is probably even more annoying than a server that is consistently somewhat slow. The heavy-duty operations are therefore the first candidates to move away from the application server onto another physical box. This makes lightweight operations more predictable and, as a result, improves the user experience.

Deployment Basics

A fully functional Enterprise Server can run in just one physical box. This can be useful for demo and testing purposes or for production with a very limited number of users. In this article we will start with a small and simple setup and we will subsequently show how to scale it up in small steps.

Let’s take the minimum set of features and put them all in one box. Enterprise Server needs:

- One database

- One File Store

- One File Transfer Server (a method of sending files separately from the workflow operational data, thereby greatly increasing performance)

Enterprise Server can play the role of an Application Server as well as the role of a File Transfer Server; this means that only one instance of Enterprise Server is required.

During logon, Enterprise Server tells the client applications which server acts as the Transfer Server: either the Enterprise Server instance the client logged on to, or another server in the server farm.

The client maintains two HTTP connections: one to Enterprise Server and one to the Transfer Server. Lightweight workflow operation data travels back and forth over the connection with Enterprise Server, while files are uploaded and downloaded over the connection with the Transfer Server. This flow is illustrated in the figure below, which also shows that everything can fit in one box.

Figure: A simple setup containing one Enterprise Server, one database and one File Store.
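To make the two-connection model more concrete, the following Python sketch shows how a client might decide which connection to use after logon. It is a hypothetical model, not the actual client or server API: the class and function names and the example URLs are made up, and it assumes that when no separate Transfer Server is announced, the instance the client logged on to also handles file transfers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SessionEndpoints:
    """Hypothetical: the two servers a client talks to after logon."""
    application_server: str  # lightweight workflow operation data
    transfer_server: str     # file uploads and downloads


def endpoints_after_logon(logged_on_to: str,
                          announced_transfer_server: Optional[str]) -> SessionEndpoints:
    # Assumption: if no separate Transfer Server is announced at logon,
    # the Enterprise Server instance the client logged on to also acts
    # as Transfer Server (the all-in-one-box setup).
    return SessionEndpoints(logged_on_to, announced_transfer_server or logged_on_to)


def connection_for(endpoints: SessionEndpoints, kind: str) -> str:
    """Choose the connection for a request of kind 'workflow' or 'file'."""
    if kind == "workflow":
        return endpoints.application_server
    if kind == "file":
        return endpoints.transfer_server
    raise ValueError(f"unknown request kind: {kind}")


# All in one box: both connections point to the same server.
single = endpoints_after_logon("http://es.example.com/", None)
# Separate Transfer Server: files no longer travel through Enterprise Server.
split = endpoints_after_logon("http://es.example.com/", "http://fts.example.com/")
```

Because the client only learns the Transfer Server location at logon, moving file transfers to another box later does not require reconfiguring the clients.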

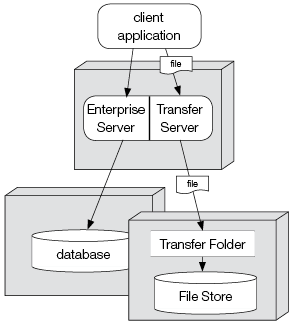

Enterprise Server needs exactly one File Store and one database. Therefore, when you need more capacity than a single box provides, the first step is to move the File Store and the database out to other boxes, as shown in the following figure.

Figure: A setup in which the database and File Store are running on different machines.

It is important to aim for a lightweight Enterprise Server, because that leads to predictable performance of workflow operations, efficient production and a responsive system. When many users are uploading or downloading large files, other users might suffer from long or random waiting times.

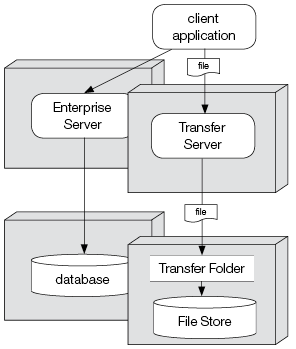

When this happens, the file transfers can be offloaded by giving the Transfer Server its own physical box. The files then no longer travel through Enterprise Server, as shown in the following figure.

Figure: A setup in which the File Transfer Server has been moved to a separate machine.

Within one box, many Enterprise Server or File Transfer Server processes can be running, each serving one client application request. Some user operations result in many requests running in parallel, each of which is handled by its own Enterprise Server or File Transfer Server process.

Obviously, more memory and/or more processors in one box means more capacity, but those resources are finite. When one box shows bad performance and it cannot be optimized further, it is time to add more boxes. With the help of a Web service dispatcher, the load can be spread across the boxes.

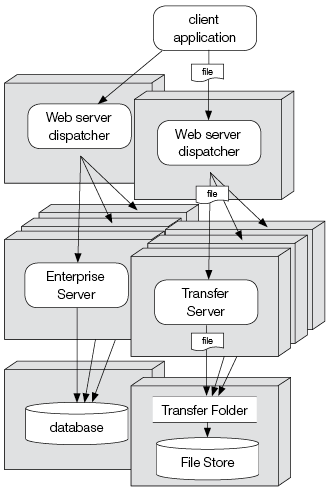

This can be done for Enterprise Server or the Transfer Server, depending on which is the bottleneck. Or it can be done for both, as shown in the following figure.

Figure: A setup in which the load is spread over several machines using dispatchers. Note that Enterprise Server moves files from the File Transfer Folder to the File Store, which is not illustrated.

In this setup, the client application still knows about just one URL for Enterprise Server and just one URL for the File Transfer Server, but now pointing to their dispatchers.
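The following Python sketch illustrates the idea of a dispatcher: the client is configured with one URL, and the dispatcher forwards each request to one of several back-end boxes. The article does not state which distribution strategy a dispatcher uses; simple round-robin is assumed here purely for illustration, and the class name, URLs and box names are hypothetical.

```python
import itertools
from typing import List


class RoundRobinDispatcher:
    """Hypothetical dispatcher: clients only know self.url, and each
    incoming request is forwarded to the next back-end box in turn."""

    def __init__(self, url: str, backends: List[str]) -> None:
        if not backends:
            raise ValueError("a dispatcher needs at least one back-end box")
        self.url = url  # the single URL configured in the client
        self._next = itertools.cycle(backends)

    def pick_backend(self) -> str:
        """Return the back-end box that should handle the next request."""
        return next(self._next)


# One dispatcher per role, as in the figure: the client knows one URL
# for Enterprise Server and one URL for the File Transfer Server.
es_dispatcher = RoundRobinDispatcher("http://es.example.com/", ["es-box1", "es-box2"])
fts_dispatcher = RoundRobinDispatcher("http://fts.example.com/",
                                      ["fts-box1", "fts-box2", "fts-box3"])
```

Round-robin ignores how busy each box currently is; for long-running file transfers, a strategy that takes the current load into account may spread the work more evenly.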

Deploying image preview generation

When a client application uploads an image, Enterprise Server can generate a preview for it. Depending on the image size, file format and compression technology, this can require significant processing time and memory. When the preview is generated by PHP, the memory is allocated by the Enterprise Server process itself. When ImageMagick or Sips is used, the memory is allocated by those separate processes. Regardless of the generator used, the processor and memory resources are always taken from the same physical box that runs Enterprise Server.

It is important to remember that the image preview generation is inseparable from Enterprise Server.

The following figure gives an overview of how preview generation works.

Figure: The process of generating an image preview

A. A user uploads an image.

B. The image is temporarily stored in the File Transfer Folder.

C. The workflow dialog is filled in and the properties are sent to Enterprise Server.

D. Even before the properties are stored in the database, the uploaded native image file is passed to one of the preview generators: PHP, ImageMagick (IM) or Sips.

E. A preview file is generated.

F. The preview file travels along with the native file to the File Store, as part of creating the image object.
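Steps D to F can be modelled as the following Python sketch, which shows why preview generation is bound to Enterprise Server: the generator runs synchronously inside the same request, on the same box. This is a hypothetical model only. The in-memory `FileStore` and `Database` classes, the storage keys and the stand-in generator function are made up, and the order of the File Store and database writes after step F is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A preview generator (PHP, ImageMagick or Sips in the real system) is
# modelled as a plain function from native image bytes to preview bytes.
PreviewGenerator = Callable[[bytes], bytes]


@dataclass
class FileStore:
    files: Dict[str, bytes] = field(default_factory=dict)


@dataclass
class Database:
    objects: List[dict] = field(default_factory=list)


def create_image(native: bytes, properties: dict,
                 generate_preview: PreviewGenerator,
                 store: FileStore, db: Database) -> str:
    """Hypothetical model of steps D-F for one uploaded image."""
    # D-E: the preview is generated in the same request (and therefore on
    # the same box) before the properties are stored in the database.
    preview = generate_preview(native)
    object_id = str(len(db.objects) + 1)
    # F: the preview travels along with the native file to the File Store.
    store.files[f"{object_id}/native"] = native
    store.files[f"{object_id}/preview"] = preview
    # Assumed: the object's properties are stored after the files.
    db.objects.append({"id": object_id, **properties})
    return object_id
```

While `generate_preview` is running, the request holds the memory and CPU of the box it runs on, which is exactly the load that other users served by that box will notice.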

When many images are uploaded and handled by one physical box, it can easily run out of resources, leading to unpredictable performance for other users served by the same box. Because the preview generation cannot be offloaded from Enterprise Server, capacity can instead be scaled up by using a Web service dispatcher, whereby Enterprise Server and the preview generator go hand in hand (see Deployment Basics above).

Deploying InDesign Server

There can be several good reasons for giving InDesign Server its own physical box:

- Enterprise Server runs on Linux, while InDesign Server supports Windows and Mac OS X only.

- The write-to-fit and preview features are frequently used by the Multi-Channel Text Editor of Content Station, so the performance of this editor can become slow.

- The application server becomes too slow for user A while InDesign Server is generating previews for user B.

- Previews are too often not shown because no InDesign Server is available to generate them.

- Enterprise Server itself already runs on multiple physical boxes.

- InDesign Server jobs are flooding the queue.

Enterprise Server is highly scalable and makes it easy to expand, for instance when a large group of users of the write-to-fit and preview features is expected in the near future.

When doing so, note that Adobe suggests installing one more InDesign Server instance than the number of available processor cores.

Example: When the server machine has a quad-core processor, install 5 InDesign Server instances for optimal performance.
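The rule of thumb above is simple arithmetic: instances = cores + 1. The following Python sketch computes it for one box; the function name is hypothetical. When no core count is passed in, it falls back to `os.cpu_count()`, which reports logical CPUs and can therefore exceed the physical core count on hyper-threaded machines, so passing the core count explicitly is the safer choice.

```python
import os
from typing import Optional


def recommended_indesign_instances(cores: Optional[int] = None) -> int:
    """Number of InDesign Server instances for one box: cores + 1."""
    if cores is None:
        # os.cpu_count() counts logical CPUs and may return None.
        cores = os.cpu_count() or 1
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return cores + 1


# A quad-core box, as in the example above.
print(recommended_indesign_instances(4))  # prints 5
```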

In the figure below, InDesign Server instances are installed on two physical boxes, each with a quad-core processor. To make every instance addressable, start each one with a unique port number and register all of these InDesign Servers on the InDesign Servers Maintenance pages in Enterprise Server.

Figure: An installation of multiple instances of InDesign Server spread over two machines

A. The Multi-Channel Text Editor in Content Station requests a preview.

B. Enterprise Server picks an idle InDesign Server instance and uploads a small JavaScript file to it (through SOAP).

C. The JavaScript runs in InDesign Server and uses the Smart Connection scripting API to log in to Enterprise Server, just to obtain a valid ticket and the workflow definition.

D. The layout is retrieved from the File Store.

E. The retrieved layout is transferred back to the waiting InDesign Server.

F. From the layout, InDesign Server generates a temporary preview file which is written to the Web edit directory.

G. At the request of Content Station, the server reads the preview.

H. The preview is returned to Content Station.

By default, the Web edit directory is located relative to the File Store. If the File Store is too busy, moving the Web edit directory to another physical location prevents InDesign Server from interfering with other workflow processes that are downloading files from, or uploading files to, Enterprise Server.

Note: Enterprise Server does not support the “Load Balancing and Queueing” (LBQ) add-on component for Adobe InDesign Server. Instead, Enterprise Server itself selects an available InDesign Server instance and assigns it a job, such as write-to-fit or preview. In the figure above, InDesign Server #5 has been selected, as indicated by arrow B.
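The following Python sketch models the setup described in this section: cores + 1 instances per box, each started on a unique port, and a job assigned to an idle instance. It is only an illustration under stated assumptions. The actual selection logic of Enterprise Server is not described here, so picking the first idle registered instance is an assumption, and the class and function names, host names and base port are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class IDSInstance:
    """One registered InDesign Server instance (hypothetical model)."""
    host: str
    port: int
    busy: bool = False
    current_job: Optional[str] = None


def register_instances(boxes: Dict[str, int], base_port: int) -> List[IDSInstance]:
    """Create cores + 1 instances per box, each with its own port number.

    Ports are numbered in one sequence across all boxes, so every
    instance in the pool gets a unique port number.
    """
    pool: List[IDSInstance] = []
    port = base_port
    for host, cores in boxes.items():
        for _ in range(cores + 1):
            pool.append(IDSInstance(host, port))
            port += 1
    return pool


def assign_job(pool: List[IDSInstance], job: str) -> Optional[IDSInstance]:
    """Assumed strategy: give the job to the first idle instance."""
    for instance in pool:
        if not instance.busy:
            instance.busy = True
            instance.current_job = job
            return instance
    return None  # no instance available, so e.g. a preview cannot be shown


def release(instance: IDSInstance) -> None:
    """Mark an instance as idle again once its job has finished."""
    instance.busy = False
    instance.current_job = None


# Two quad-core boxes, as in the figure: 10 instances in total.
pool = register_instances({"ids-box1": 4, "ids-box2": 4}, base_port=18383)
```

In a real server farm, several Enterprise Server processes assign jobs concurrently, so the busy/idle state would have to live in shared storage with proper locking rather than in a single in-memory list.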

Related Information

The File Transfer Server of Enterprise Server 9

